AMD: Will More CPU Cores Always Mean Better Performance?

The company that helped inaugurate the multicore era of CPUs has begun studying the question: Will more cores always yield better processing? Or is there a point where the law of diminishing returns takes over? A new tool that lets developers take advantage of available resources could help find the answers, and perhaps make 16 cores truly feel more powerful than eight.

Two years ago, at the onset of the multicore era, testers examining how simple tasks took advantage of the first CPUs with two on-board logic cores discovered less of a performance boost than they might have expected. In the earliest tests, some were shocked to discover that a few tasks actually slowed down under a dual-core scheme.

While some were quick to blame CPU architects, it turned out the problem was the way software was designed: If a task can't be broken down, two or four or 64 cores won't be able to make sense of it, and you'll only see performance benefits if you try to do other things at the same time.
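
The point about tasks that can't be broken down is often modeled with Amdahl's law. The article does not name that law, so treat the following as an illustrative assumption rather than anything AMD presented. The function name and the 50% figure are hypothetical, chosen only to show how quickly the returns diminish.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup when only `parallel_fraction` of a task can be split up.

    Assumes perfect scaling of the parallel part and no scheduling or
    communication overhead, which real systems never quite achieve.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # A task that is only half parallelizable (hypothetical figure).
    for cores in (1, 2, 4, 8, 16, 64):
        print(f"{cores:>2} cores: {amdahl_speedup(0.5, cores):.2f}x")
```

Under this model, a task that is only half parallelizable can never reach a 2x speedup, no matter how many cores are added, and a task with no parallel portion gains nothing at all.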

So when AMD debuted the marketing term "mega-tasking" a few months back, defining it to refer to the performance benefits you can only really see when you're doing a lot of tasks at once, some of us got skeptical. Maybe the focus of architectural development would be diverted for a while to stacking tasks atop one another, rather than streamlining the scheme by which processes are broken down and executed within a logic core.

Today, AMD gave us some substantive reassurance with the announcement of what's being called lightweight profiling (LWP). The idea is to give programmers new tools that help a CPU (specifically, AMD's own) determine how their programs can best use its growing stash of resources. In a typical x86 environment, CPUs often have to make their own "best guesses" about how tasks can be split up among multiple cores.

Low-level language programmers do have compiler tools available to them that can help CPUs make better decisions, but they don't often choose to use them. So as CPU testers often discover, many tasks that ran on one core before continue to run on one core today.

"We think the hardware needs to work together with the software, to enable new ways to achieve parallelism," remarked Earl Stahl, AMD's vice president for software engineering, in an interview with BetaNews. "Lightweight Profiling is what we're going to be releasing as a first step in this direction."

As Stahl explained to us, software that truly is designed to take advantage of multiple cores will set up resources intentionally for that purpose: for example, shared memory pools, which a single-threaded process probably wouldn't need. But how much shared memory should be established? If this were an explicit multithreading environment like Intel's Itanium, developers would be making educated guesses such as this one in advance, on behalf of the CPU.

So LWP tries to enable the best of both worlds, implicit and explicit parallelism: It sets up the parameters for developers to create profiles for their software. AMD CPUs can then use those profiles on the fly to best determine, based on a CPU's current capabilities and workload, how threads may be scheduled, memory may be pooled, and cache memory may be allocated.

"Lightweight Profiling is a new hardware mechanism that will allow certain kinds of software within real time to dynamically look at performance data provided by the CPU as it's executing," Stahl told us, "and then can take action on that performance data to better optimize its own processing."

AMD believes about 80% of the potential usefulness of LWP will be realized by just two software components: Sun's Java Virtual Machine, and Microsoft's .NET Runtime module. While operating system drivers will not be necessary for operating systems to take advantage of LWP, it's AMD's hope that developers who are using high-level, just-in-time-compiled languages anyway will be able to automatically benefit from LWP, at least for the most part.

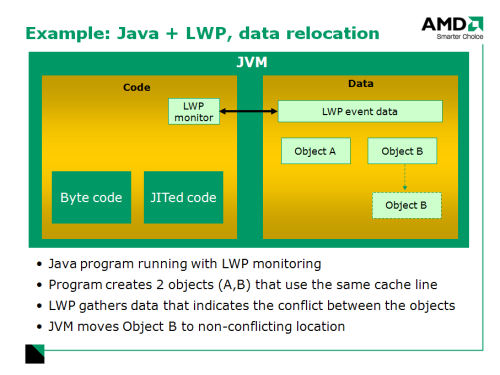

Earl Stahl shared with us an example: It involves two data objects being instantiated by the Java VM, which would normally be located in the most convenient spots available at the time. But depending upon how a multicore environment is currently being utilized, those locations that might seem convenient to Java may actually cause performance pressure points that wouldn't have been realized if Java had the system all to itself.

"As code is executing within a Java virtual machine, at some point a couple of objects [A and B] may get allocated by the memory management system within the JVM," Stahl explained. "As those objects are being accessed, periodically a JVM can use Lightweight Profiling to identify the potential hot spots, which may actually point to objects A and B actually causing a significant number of misses in the cache - which turns out to be a big performance bottleneck if that occurs. By being able to identify that dynamically, the garbage collector or heap manager can actually move one of those objects then, in response to that information, and improve the performance in a dynamic way without the higher-level applications having to know about that.

"There are significant gains to be had," he continued, "when even in this simple example where objects are conflicting at the cache level, you lose the benefit of the cache and start having to go back to the much slower memory access on each write or read from that object - it can have a significant impact on software."

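Stahl's cache example can be illustrated with a toy model. The sketch below does not use LWP or any AMD interface. It simulates a hypothetical direct-mapped cache (real caches are usually set-associative) and stands in for the kind of miss data a runtime might get from the hardware. All names, sizes, and the relocation threshold are assumptions made for illustration.

```python
LINE_SIZE = 64    # bytes per cache line (assumed)
NUM_LINES = 512   # lines in a hypothetical 32 KiB direct-mapped cache


def count_misses(addresses, line_size=LINE_SIZE, num_lines=NUM_LINES):
    """Count misses for a sequence of byte addresses in a direct-mapped cache."""
    cache = {}  # cache slot index -> tag of the line currently stored there
    misses = 0
    for addr in addresses:
        line = addr // line_size
        index = line % num_lines
        tag = line // num_lines
        if cache.get(index) != tag:
            misses += 1
            cache[index] = tag  # evict whatever was in this slot
    return misses


def alternating_accesses(addr_a, addr_b, rounds):
    """Access object A, then object B, repeated `rounds` times."""
    trace = []
    for _ in range(rounds):
        trace.append(addr_a)
        trace.append(addr_b)
    return trace


def relocate_if_thrashing(addr_a, addr_b, rounds=1000, threshold=0.5):
    """Mimic a heap manager: move B one cache line over if the miss rate is high."""
    trace = alternating_accesses(addr_a, addr_b, rounds)
    miss_rate = count_misses(trace) / len(trace)
    if miss_rate > threshold:
        return addr_b + LINE_SIZE  # lands in the next cache slot
    return addr_b


OBJECT_A = 0x10000
# Exactly one cache size away from A, so both objects map to the same slot.
OBJECT_B = OBJECT_A + LINE_SIZE * NUM_LINES

if __name__ == "__main__":
    before = count_misses(alternating_accesses(OBJECT_A, OBJECT_B, 1000))
    moved_b = relocate_if_thrashing(OBJECT_A, OBJECT_B)
    after = count_misses(alternating_accesses(OBJECT_A, moved_b, 1000))
    print(f"misses before move: {before}, after move: {after}")
```

In this model, the two objects evict each other on every access until one of them is moved, after which only the two initial cold misses remain. The application that owns the objects never has to know the move happened, which is the behavior Stahl describes.
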
Historically, when developers have been given tools that let them either conserve whatever resources may still be available or "go for the gusto," they've chosen the latter. This has worked against systems that try to budget or schedule times or allocation blocks appropriately, as applications all tended to shout that they deserved the highest priority.

So the question arises with respect to LWP: Will this actually enable processes to be conservative, especially in the eight-core era and beyond? As we've already seen, when gigabytes of memory and data storage become cheaply available, the tendency is for applications to consume them. What's to prevent developers from turning up the volume knobs, if you will, on LWP profiles to the highest setting?

For now, AMD's Earl Stahl isn't really sure. "In general, I think your question is a good one, which is really more a question about, how will runtime and applications utilize this?" he responded. "Will it be to make a single thread run faster or to make multiple threads of execution run faster, or perhaps have a more efficient set of those? I think that will be actually driven by the software itself. It's more domain-specific, because [LWP] is not limited to either of those. It could help to achieve either one, and we're not pre-supposing which of those it would most benefit."

Later, he suggested we may just have to implement LWP first, in order for us to find the answer for ourselves. "LWP as a hardware extension - looking ahead at this new era we envision where there's some new techniques in software that have to emerge for achieving parallelism - is within those new software techniques [where] there'll be either implicit or explicit abilities to make some of those decisions," he said.

"Part of what limits things today in the world of many cores is that it is explicitly on the shoulders of the software developer to have to figure out ways to exploit those, and it becomes a level of complexity that is really just not worth the investment. So you're describing and discussing a topic that says, where do the new software techniques take us? How does the developer ensure that they can exploit appropriately whatever the available hardware is? And there are new techniques in the software ecosystem that are going to emerge, to help achieve that."